Dev:SynthJS


| Deployment: http://lqkhoo.com/synthjs/index.html (alpha) |

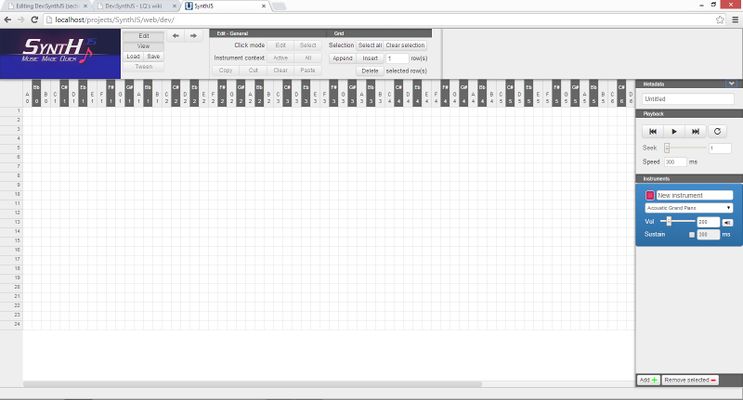

SynthJS

SynthJS is an application that lets users quickly synthesize their favorite tune within the browser with little to no musical knowledge, and lets them turn their new creation into a rhythm game. I want to build something that people can both use to do something worthwhile and play with (if they want to), and the interesting thing about it is that they get to play with their own creation; the application is a constant but their experience is as individual as they want to make it.

This is still very early in development - most of the features and parts of the GUI haven't even been implemented yet!

I've had this idea of a music synthesizer / rhythm game for a while, but I wasn't confident enough in execution (JavaScript, mainly) to start it yet, plus I'd thought there would be something like this out there already. There are tons of HTML5 audio projects out there - this article lists more than a few - but I couldn't find anything like what I wanted to make (SynthJS). So, I thought I might as well start now, throw myself into the deep end, see how far I can take the idea and hopefully learn a few things along the way.

Currently it's built on top of libraries like MIDI.js, with Backbone.js for models and jQuery for the DOM, but this may well change since this is very early in its development. This is especially the case for the music libraries as they're all fairly new.

Roadmap

The first phase is to get the synthesizer to a reasonable state as that's the original core idea. If I don't run into performance or programming brick walls then I'll start looking at serializing the data (should be straightforward) and making a compatible rhythm game out of it. Getting SynthJS to output audio files is possible (there's this project doing it already), but not a priority.

Synthesizer / sequencer | SynthJS - Music made quick

- Instruments

- Allow add/edit/delete instruments {Done}

- Playback

- Implement volume controls {Done}

- Add additional instrument sounds {Done}

- UI

- Add note highlighting based on selected scale

- Usability

- Implement undo stack + associated command classes {Done}

- Add sound preview for instrument {Not feasible - too expensive on memory as MIDI preloads everything}

- Copy squares / rows / columns of grid

- Add transparency slider for inactive instrument layers

- Add vertical grid options - black key shading / by scale

- Add horizontal major and minor gridline options

- Capability

- Add waveform editor (ADSR envelope only) {?}

- Add serialize-to-JSON capability {Done}

- Add load-from-JSON {Done}

- Have a way of specifying play duration per note {Done}

Other optional but useful requirements I can think of I'll add below:

- Performance directions:

- Ornament sub-grid - let users specify special treatment for specific note heads

- Volume tweening

- Sustain-duration tweening

- Tempo tweening

Game | SynthJS - Music played quick

- We'll see

Luxury

Features which are not planned but I might consider if I somehow have too much time on my hands. These are anticipated to be very expensive in terms of man-hours or do not directly contribute to what I set out to make SynthJS into, but would be seriously impressive or make the application useful in other ways if pulled off, and that's a big if.

Main problems now

- Tuplets

- I still have to figure out a way to elegantly specify tuplets. My current train of thought is to specify a special container for an array of note objects, and change NoteCollection to a polymorphic Backbone collection. This container is aligned to the grid, but the notes within are not - MIDI.js can play these back just fine. Distributing the timings is trivial. No, the problem comes from ties, specifically ones that tie from a note in a tuplet to one outside, or inside yet another tuplet. Currently I sidestep the issue by just extending the note by however many units of grid I like. I can fudge it by adding a reference field from a note to another note, and if they're tied I add the value of the 2nd note to the first and set the 2nd note to not play, but that's just ugly, and I'm really wondering if there's a better way to do it. {Solved} - #Design consideration 03 - The timing model

- Default soundfonts

- They are decent, especially the piano ones, but are lacking in certain areas - brass / violins etc don't play for longer than 2-3 seconds. If I want any serious playback capability beyond just piano, I need to do something about this, like building my own soundfonts ...

- Staves and engraving.

- Originally I set out to build this into SynthJS with the requirement that I find a WYSIWYG framework for music engravings - believe me, I looked - there is none. The closest thing I found was VexFlow, but it's a rendering suite, not an editing one, so I settled on a grid-based "notation". It's equivalent, it's just not as elegant or compact. To go from grid to engraving the model needs a couple of extra things at the bare minimum - the time signature, what the value of each grid is (one semiquaver etc.), and key signature. For instruments with two staves like the piano, one needs a method to specify which notes belong to which stave. There doesn't appear to be a way to specify rendering two staves in VexFlow, although LilyPond supports it (of course).

Similar projects

- There's loads of HTML audio stuff going on. I'll list the interesting ones here when I get time.

Design decisions / considerations

The application is built upon several core models:

| Orchestra | The top level singleton model handling all the playback and containing all other models concerned with music-playing |

| Instrument | The model for an instrument. Contains a number of Beat instances as well as other parameters |

| Beat | The model to handle each time value. Contains an array of bools for each pitch (88 of them), as well as other parameters |

Design consideration 01 - The grid

After I got the grid functionally working, it was time to optimize its performance. Initially I misidentified the cause of the performance issues as my models, but it turned out to be the DOM that was causing the most problems. You see, the application is essentially a giant grid.

- Let m be the number of instruments

- Let n be the number of beats in the piece of music.

The grid is 88 columns wide (there are 88 keys on a piano), n rows down, and m instruments (layers) deep. We're looking at 88 * n * m divs minimum if we generate the grid naively, which is what the implementation was doing.

The implementation at the time of writing does not use HTML5 web workers to generate the audio output (if it comes to that because of performance issues later then I'll do it). Why did I initially guess the performance bottleneck to be with my models? When the user hits play, the application scans all 88 frequencies per instrument to decide what to play. It then generates Timbre.js objects on the fly and passes them through a Timbre.js Adder (which performs an arithmetic sum on the generated sound oscillators + envelopes).

That's the initial performance concern. I was tweaking the grid headers as well - initially they were generated along with the grid, so if the user scrolled away from the initial position, they became hidden. I thought of 'floating' them via JS (or CSS sticky when it finally gets implemented), but bearing in mind the event handlers in place, I wanted to just have one set of headers for all instrument layers, so I moved them out. After solving CSS problems (the top bar has to scroll with the grid but stay static), it looks all nice and works the same, but I still haven't solved the performance issues yet.

I had an idea of letting users limit the instruments' range to reduce the 88 * m * n space since an instrument would probably use less than 33% of the 88 frequencies, usually -- most HTML5 audio projects I've seen out there let the user play with between a dozen to two dozen frequencies at most -- and I'd just let this be set on individual instruments. All it needs is an additional field per instrument masking out those frequencies - during playback, before creating the Timbre objects, perform a boolean AND on the mask and the registered frequency to decide whether to play it or not. That's to target the potential bottleneck being generating those objects. Seems like a good direction to optimize (it really does shave off a lot of that space) but turns out this wasn't necessary.
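The masking idea above can be sketched roughly as follows. This is only an illustration, assuming each beat holds 88 booleans and each instrument carries a mask of the same length; the names makeRangeMask and activePitches are hypothetical and not part of SynthJS.

```javascript
// Hypothetical sketch of the per-instrument range mask; not SynthJS's actual code.
const NUM_PITCHES = 88;

// Build a mask that enables only the pitches in [low, high] (0-based key indices).
function makeRangeMask(low, high) {
  const mask = new Array(NUM_PITCHES).fill(false);
  for (let i = low; i <= high; i++) mask[i] = true;
  return mask;
}

// Return the indices of pitches that are registered on the beat AND allowed by the mask.
// Only these would go on to have sound objects created for them.
function activePitches(beatPitches, mask) {
  const result = [];
  for (let i = 0; i < NUM_PITCHES; i++) {
    if (beatPitches[i] && mask[i]) result.push(i);
  }
  return result;
}
```

With a mask covering roughly an octave, a note registered outside that range is simply skipped before any sound object is built.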

There's another way, which is to cache which frequencies are actually active at any time and only scan those values -- that's if the bottleneck is fetching all those Backbone Beat instances and arrays, although I thought this is pretty unlikely to be the issue.

Halfway through solving the CSS issues, I decided to hide parts of the grid to troubleshoot z-indices and layering problems, but I had some notes registered so I hit play anyway. Funnily enough, there were no problems playing about a hundred notes simultaneously. So I had a look at the grid and decided I might as well streamline it now since I had pinpointed my bottleneck.

I made sure of one thing - I tried setting the elements to display: none to take them out of layout and stop the browser rendering them (they stay in the DOM), and this doesn't cause any problems even when there are tons of them, so this is going to be my approach - hide the squares until they become active. To remove the grid, first I redefined my CSS for the squares to be absolutely-positioned based on their frequency (They were floated left before -- yeah, I know). Since there are 88 frequencies max, I just created 88 CSS definitions.

I settled on an in-between event catcher layer. Initially I opted for a blank div to capture mouse click coordinates (like thus), and then map them down to the active instrument, but then I realized that without the squares, there's no grid! So, I'm going to populate this in-between layer with two layers - one with 88 divs going down, one with n rows Backbone-mapped to the number of beats tracked by the Orchestra singleton. This way, I get a grid for 88 + n divs instead of 88 * m * n. I'll just have to define an opacity filter and/or color difference for non-active instruments to differentiate between active and inactive instruments. I checked for a way to trigger events on overlapping items so I don't have to calculate coordinates, but since neither of these divs is a parent or child of the other, it's not possible to make use of event bubbling / propagation.
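To make the capture-layer idea concrete, here is a small sketch of mapping a click position to a grid cell, together with the div-count arithmetic from above. It assumes fixed-size squares, with pitch across and beats down as in the layout at this point; cellFromOffset and the two count helpers are hypothetical names, not SynthJS functions.

```javascript
// Hypothetical helper: map a click inside the capture layer to a grid cell,
// assuming every square is cellWidth x cellHeight pixels.
function cellFromOffset(offsetX, offsetY, cellWidth, cellHeight) {
  return {
    pitch: Math.floor(offsetX / cellWidth),  // column index, 0..87
    beat: Math.floor(offsetY / cellHeight)   // row index, 0..n-1
  };
}

// Number of divs for m instruments and n beats under each approach.
function naiveDivCount(m, n) { return 88 * m * n; }
function layeredDivCount(n) { return 88 + n; }
```

For example, with 4 instruments and 200 beats the naive grid needs 70400 divs, while the two-layer version needs 288, regardless of the number of instruments.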

{Edit}: Actually, I just thought about the fact that I could get a grid by simply pre-rendering it as a single-square PNG and setting it to background-repeat! Do I do it? Nah, it's cheating =p Maybe at the end to eke out that last drop of performance.

Design consideration 02 - The music model

Up to this point the project has essentially been a huge spike - a lot of the work was to discover performance bottlenecks and figure out how to do things. Now, with most of those out of the way, it's time to tackle the main problem: how to represent the music.

Right now the model is what I call the music box model. The music is divided into a number of beats, and an instrument either plays a particular note on that pulse or not - much like the rotating drum and pins of a music box. What it does not let me specify is how long the note needs to be played. While this is perfectly fine for percussive / plucked / struck instruments like the guitar or to a certain extent the piano, this will not do for woodwind / brass / strings / vocals at all.

Now, if I want any decent chance at making it into a rhythm game, or have a way of representing a good number of pieces reasonably accurately, this needs to change, and since this is the core of the application, the change needs to be now. It's stopping me from working on the actual sound-producing features like instruments and the envelope editor because if I were to change this later it would be throwing all that work away. Even now, it means rewriting the whole grid, so this is going to take a good few days.

Instead of an array of Beats, Instruments are going to hold a fixed 88 instances of Backbone collections of Pitch(es), and each one of these would have any number of Note(s). These notes would have their id property as their starting time, and a duration property in terms of the number of smallest time intervals. Model-wise this needs another element to work - a boolean array specifying which time intervals are open for that frequency - you can't play the same note again if the previous one is still sounding. If an interval is closed then the proper Note to edit can be found by walking backwards until the first Note is reached - this is the note which is playing for more than one unit of time. Presentation-wise Backbone needs to sync absolutely positioned divs - their left property, and their width property. It's the same when deleting time intervals. However, if the root note is deleted, then the array needs to open up the time intervals. When playing, the loop gets all 88 collections and tries to fetch the notes by the id of the current time interval. If there's no note, that frequency is simply not played.
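A rough sketch of the planned per-pitch model, using plain objects instead of Backbone collections so it stays self-contained. The function names (createPitch, addNote, findNoteAt, deleteNote) are made up for illustration; only the idea of notes keyed by start time plus a boolean array of open intervals comes from the plan above.

```javascript
// Plain-object stand-in for one Pitch collection; Backbone is deliberately left out.
function createPitch(totalIntervals) {
  return {
    notes: {},                                  // start time (id) -> { id, duration }
    open: new Array(totalIntervals).fill(true)  // true = interval is free for this pitch
  };
}

// Add a note if every interval it covers is still open.
function addNote(pitch, start, duration) {
  for (let t = start; t < start + duration; t++) {
    if (t >= pitch.open.length || !pitch.open[t]) return false; // out of range or overlapping
  }
  pitch.notes[start] = { id: start, duration: duration };
  for (let t = start; t < start + duration; t++) pitch.open[t] = false;
  return true;
}

// Walk backwards from a closed interval to the note that covers it.
function findNoteAt(pitch, time) {
  if (pitch.open[time]) return null;
  let t = time;
  while (t >= 0 && !pitch.notes[t]) t--;
  return t >= 0 ? pitch.notes[t] : null;
}

// Deleting the root note re-opens every interval it occupied.
function deleteNote(pitch, start) {
  const note = pitch.notes[start];
  if (!note) return false;
  for (let t = start; t < start + note.duration; t++) pitch.open[t] = true;
  delete pitch.notes[start];
  return true;
}
```

During playback, looking up pitch.notes[currentTime] for each of the 88 pitches either yields a note to start or nothing at all.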

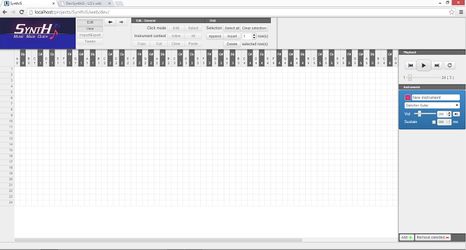

While I'm at this I'll take the chance to transpose the grid - time goes right, pitch goes down, and I'll add a checkbox for each frequency to let users disable them, and these objects are not scanned during play - it's very unlikely for an instrument to play more than 3 - 4 octaves.

That's the current plan. Will update if anything changes or if I overlooked anything.

Design consideration 03 - The timing model

I was perplexed at how to represent tuplets of all kinds in the grid, so I've had a brief look at some digital audio workstation software, specifically their sequencer components, and I found that they sidestep the issue by making use of something called quantization. They allow users to specify, for each beat, what the divisions are for that beat - so say we are in a time signature of 4/4 and we want a quaver with three semiquaver triplets in one single beat, then what you do with quantization is that you split that beat into /24 (/4 for each beat, then subdivide that beat by 6 - and we get /24), and it's a simple matter of dragging that note div out to the required length. For the example scenario then the quaver would occupy three sixths, and each semiquaver triplet member would occupy one sixth.

If there are complicated rhythms like cross-beat triplets then you simply convert all the beats involved to the quantization that is the lowest common multiple of all the subdivisions those notes need.
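As a small worked example of picking that quantization, the sketch below computes the lowest common multiple of the subdivisions a beat needs. The function names are hypothetical; the input is just the list of divisions per beat (2 for quavers, 6 for semiquaver triplets, and so on, with a crotchet beat).

```javascript
// Greatest common divisor via Euclid's algorithm.
function gcd(a, b) {
  while (b !== 0) {
    const r = a % b;
    a = b;
    b = r;
  }
  return a;
}

function lcm(a, b) {
  return (a / gcd(a, b)) * b;
}

// divisions: how many equal parts of a beat each rhythm needs.
// Returns the smallest number of quanta per beat that fits them all.
function commonQuantization(divisions) {
  return divisions.reduce(function (acc, d) { return lcm(acc, d); }, 1);
}

// Length of one note of a given division, measured in quanta.
function noteLengthInQuanta(quantaPerBeat, division) {
  return quantaPerBeat / division;
}
```

For the quaver plus semiquaver-triplet example, commonQuantization([2, 6]) gives 6 quanta per beat, so the quaver takes 3 of them and each triplet member takes 1, matching the three sixths and one sixth described above.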

So, the timing model planned - the Orchestra owns a collection of Bar(s), each of which owns a collection of Beat(s), each of which owns a collection of Quantum (Quanta). A note would have the reference to the Bar it starts in, the Quantum it starts on, and the number of Quanta it is held for. I'm expecting all this to take quite a few full days of work to get done properly, and I'll update here if I overlooked anything.

Diary

Mar 10

I haven't committed any new code as the other projects still have priority, but in the meantime, I've had a good look at Kinetic.js as a solution to the problems the grid is having. Divs seem to have a problem with scalability, but the main problem with a canvas is that it's difficult to achieve the same sort of MV* binding that I'm looking for (that I'm currently using with Backbone Modelbinder). I have to give this some more thought and do a spike or two before settling on a solution.

Feb 27

Status update -- been jamming some mad hours into my research project, but I'm likely to get some reprieve by Friday to work on this one.

{Edit} Nope, looks like no reprieve for me. Got loads done but have still more to do. This project will be sidelined until I finish up most of the other two coursework projects which are due in 2 and 4 weeks.

Feb 23

Transposed the grid, so time goes to the right. This is in preparation for some extensive rework on the timing model in order to support tuplets properly. See #Design consideration 03 - The timing model

Feb 22

I got the UI operations standardized today - the init/render declarations were a bit messed up and some views were re-rendering unnecessarily during a new load operation, so I took some time to clean things up. Now, upon loading new data, both the current top level view and controller are deleted, and then a new top level view refreshes as if the application is just newly initialized. Then I initialize the models and finally call render() on all the views to bind them properly. That took me a while.

Also, I got load from file and save to file working, using Blob.js and FileSaver.js. I was working to get localStorage up and running but found it to be only marginally useful, so I decided to abandon it -- firstly it's on a per-browser basis, but that's kind of a given. Secondly, if the user has any kind of cleanup software like CCleaner then it will probably wipe localStorage as well unless the user sets it as an exception. I'd rather save the user the headache and grief, and since modern browsers (which is what I am targeting) support both the File API and localStorage, files alone will do.

Priority now is to render the logo as a PNG since it's borked in anything but Chrome thanks to the italic @font-face embed, and to finish up the remaining grid operations, so I can deploy this as version 0.1. After that I can start looking into the more difficult features like ornamenting / tuplets / subgrids, tweening, and VexFlow rendering.

Feb 21

Ok, got the functional programming coursework out of the way, and made quite some headway on the research project, so I'm now back to this. Got serialization fully working today, so the whole Controller class gets serialized into a JSON string to be stored and can be reconstructed again. The main problem was the Backbone views not rebinding onto the models, but I got this one squarely done now. Now I need to look at file storage and local storage. Local storage should be straightforward: create a field called SYNTH and store the JSON under the music's given name. For files I need to have a look at FileSaver.js.

Feb 17

Added playback speed UI element, did some work on serializing the data. Backbone.js by default serializes all model attributes and this was giving me a nondescript error message in Chrome's console like this: <error>. This had me puzzled for quite a bit, but then I fired up Firebug and it said something much more helpful -- Too much recursion. This immediately led me to suspect that the serialization was following all the circular references I had in my models. I had to look up Backbone.js's source for toJSON, since it's not in the documentation (great..) and it turns out it's just a simple call to _.clone(this.attributes) where this is the model.

I had to create an abstract model which overrides this toJSON method, which takes into account me specifying transient properties via a transientAttrs attribute within the model definition. Any model attribute specified within this property would be ignored by my version of toJSON, and it got Backbone to serialize.
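The transient-attribute idea can be sketched without Backbone as follows. This is a stand-in, not the actual abstract model: it assumes a model-like object with an attributes hash and an optional transientAttrs array of names to skip, and toJSONWithoutTransients is a hypothetical name.

```javascript
// Stand-in for a Backbone-style model: `attributes` holds the data,
// `transientAttrs` lists attribute names that must not be serialized
// (for example back-references that would create cycles).
function toJSONWithoutTransients(model) {
  const skipped = model.transientAttrs || [];
  const out = {};
  Object.keys(model.attributes).forEach(function (key) {
    if (skipped.indexOf(key) === -1) out[key] = model.attributes[key];
  });
  return out;
}

// Usage: an instrument that points back at its orchestra.
const orchestra = { attributes: { name: "demo" } };
const instrument = {
  attributes: { name: "piano", orchestra: orchestra },
  transientAttrs: ["orchestra"]
};
orchestra.attributes.instrument = instrument; // circular reference

// Serializes without recursing into the back-reference.
const serialized = JSON.stringify(toJSONWithoutTransients(instrument));
```

In a real Backbone model the same filtering would live in an overridden toJSON method; the plain function here just keeps the example runnable on its own.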

Now that I got the data to serialize, I can work with raw JSON strings for now, but eventually I want to give users the option to save to the local session (if available), or otherwise to a JSON file. This seems to require even more plugins (StackOverflow pointed me to FileSaver.js, and Blob.js), but I'll leave those for later. Priority now: Get load/save fully working to and from local storage and via raw JSON.

Now I have my research project to look to so I have to wind this down a bit over the next couple of days until Friday, but I really want to get this done quickly.

Feb 16

Wow this update took seriously long. The main problem is the undo/redo stack - deleting and adding beats aren't trivial operations and they involve information loss - when deleting a beat, if a note overlaps it then its value needs to be reduced by one - but when restoring the beat, the note may or may not have been truncated - there's not enough information to resolve that.

There's two methods to implement an undo/redo stack - one is the current implementation, which is to perform the logical reverse, but when there's information loss, there's another option - save the previous state of the working area. I initially substituted underscore.js with lo-dash since it has a deep copy option, but I found that to be way too expensive. Deleting just three beats took about half a second as the browser frantically deep copied hundreds and hundreds of nested attributes and objects, so that's unfeasible. By then I knew that I needed a custom-built method of state representation.

Hence a lot of this work is to create helper classes and methods to facilitate an even more powerful Command framework. Non-trivial operations produce a so-called blueprint object - this is an array encapsulating a series of pre-constructed sub-command functions which are executed in strict ordering as one single Command. When undoing, their logical reverse is executed, in strict reverse order. Now that's a lot of work to add and insert rows -.-' .. but at least now I have the framework to represent just about any permutation of operations on the grid if need be.
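The blueprint idea might look roughly like this in miniature. It is a sketch under the assumption that each sub-command is an object with exec and undo functions; createCompositeCommand is a hypothetical name and the real Command classes are certainly richer.

```javascript
// blueprint: ordered array of sub-commands, each { exec: fn, undo: fn }.
// The composite runs them in order, and undoes them in strict reverse order.
function createCompositeCommand(blueprint) {
  return {
    exec: function () {
      for (let i = 0; i < blueprint.length; i++) blueprint[i].exec();
    },
    undo: function () {
      for (let i = blueprint.length - 1; i >= 0; i--) blueprint[i].undo();
    }
  };
}
```

Because each sub-command captures whatever it needs when the blueprint is built (for example the original length of a note before it is truncated), the reverse pass has the information that a plain logical reverse would have lost.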

I spent some time yesterday planning out some of the menus up top - there's a whole load of buttons there now but most of them aren't functional yet. And I've refactored how SynthJS detects whether a given time is occupied by a note or not, and finding the closest note (if it exists) before or after that given time. It was walking the time array one by one before (!!) as a naive implementation, so say if the music is 500 squares long and we query the last square for the last note before it, it will actually usually walk all 500 steps backwards since most pitches are empty anyway. Now it's doing binary search using only note start times so it's independent of the number of squares (and instead depends on the number of notes), and it's O(log(n)) time at that, so I don't suppose it can get any better than that.
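A minimal sketch of that lookup, assuming the notes of one pitch are kept as an array sorted by start time and never overlap. The names lastNoteAtOrBefore and isOccupied are hypothetical.

```javascript
// notes: array of { start, duration }, sorted by start, non-overlapping.
// Returns the index of the last note whose start is <= time, or -1 if none.
function lastNoteAtOrBefore(notes, time) {
  let lo = 0;
  let hi = notes.length - 1;
  let found = -1;
  while (lo <= hi) {
    const mid = (lo + hi) >> 1;
    if (notes[mid].start <= time) {
      found = mid;      // candidate; look for a later one
      lo = mid + 1;
    } else {
      hi = mid - 1;
    }
  }
  return found;
}

// A time is occupied if the closest note starting at or before it is still sounding.
function isOccupied(notes, time) {
  const i = lastNoteAtOrBefore(notes, time);
  return i !== -1 && time < notes[i].start + notes[i].duration;
}
```

The number of comparisons depends only on how many notes the pitch has, so querying square 499 of a mostly empty pitch costs a handful of steps instead of a walk back through every square.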

Most important thing now is to enable selection of notes either via rubber-band or via row-column intersection, oh and playback speed - I've kind of ignored that for a while - it just needs a UI element that's all. The undo/redo buttons need proper icons. Once copy/paste/clear is done then v0.1 would be ready for deployment.

Feb 14

Right, got the player controls down pat. MIDI.Player is used to play when reading in data from MIDI files, but I'm generating notes on the fly, so I can't really use the built-in play controls (or at least I couldn't find a way to). So I implemented an event queue and used window.setTimeout to time things properly, much like MIDI.Player does on its own. Now that playback fully works, I need to do the trickiest bits - adding and deleting beats. It's tricky primarily because it interferes with play behavior - I'm trying to not disable editing whilst it's playing - for one, it's more work for me; for another, I believe it can be done reliably if given enough thought. Plus I need to do custom checking for each note - if a note starts on a beat being deleted, delete it, otherwise if its value extends over that beat, reduce it by one. Don't really foresee any problems with it - that's the way I like it. That's it for today. It's very late.
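The per-note check for deleting a beat could be sketched like this. It works on a plain array of { start, duration } objects for one pitch and returns a new array; deleteBeat is a hypothetical name, and shifting later notes one step earlier is an assumption on my part rather than something stated above.

```javascript
// notes: array of { start, duration } for one pitch. Returns a new array
// describing the notes after the given beat has been removed.
function deleteBeat(notes, beat) {
  const result = [];
  notes.forEach(function (note) {
    const end = note.start + note.duration; // first interval after the note
    if (note.start === beat) {
      return; // starts on the deleted beat: the note is deleted
    }
    if (note.start < beat && beat < end) {
      // extends over the deleted beat: reduce its value by one
      result.push({ start: note.start, duration: note.duration - 1 });
    } else if (note.start > beat) {
      // assumption: notes after the deleted beat move one step earlier
      result.push({ start: note.start - 1, duration: note.duration });
    } else {
      // ends before the deleted beat: unchanged
      result.push({ start: note.start, duration: note.duration });
    }
  });
  return result;
}
```

Returning a fresh array rather than mutating in place keeps the old state around, which is handy if the operation later needs to be undone.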

Feb 13

Linked model to MIDI.js and managed to get playback. Most of today's work is getting the Instrument's model right - each one has an adjustable volume between 0 and 1,000 (MIDI's velocity), can be muted, and has a sustain option. If sustain is on, the playback model will ignore the note value and try to achieve the sustain duration, where the instrument allows (e.g. setting sustain to 10 seconds for a drum won't do much good, but for strings and piano etc. it works very well).

Add/remove instrument now fully works, but that's comparatively minor. Had to make a minor change to MIDI.js as it was creating a new AudioContext every single time an instrument is changed and the play button is hit. I've changed it to reuse the same one if one exists - since my browser was telling me there's a hardware limit of 4 AudioContext objects at any time.

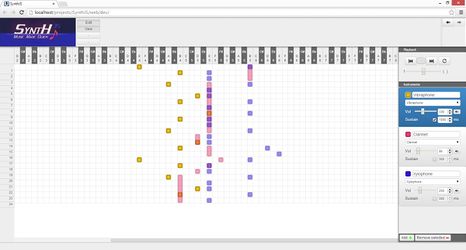

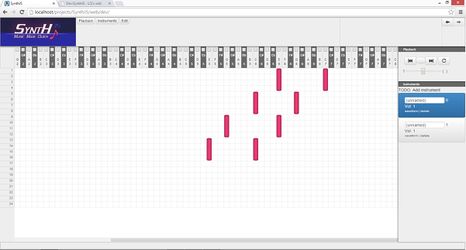

Very happy with today's progress. Screenie is a trial run of the first few beats of Mechanical Kingdom from Radiant Historia. Sounds decent enough without dynamics and tweening, and with the limited sound samples.

Next step is to get the playback panel fully back up and get the buttons to stop MIDI.Player from playing etc... and the UI to add more beats / delete them.

Feb 12

Had to fix more bugs with the grid but I think that's all of them. Tidied up the grid event handler code somewhat - bugs were caused by two off-by-one errors. Got grid select rows working, as well as add/remove instruments through the undo/redo stack. Had a look at MIDI.js - found a way to translate the current music model to it - MIDI can play on multiple audio channels - I'll need one for each individual instrument. Each one of the 88 notes is played as:

MIDI.chordOn(<channel: int>, [MIDI.pianoKeyOffset, .. ], <velocity: int>, <delay: float>);

MIDI.chordOff(<channel: int>, [ .. ], <delay: float>);

MIDI.pianoKeyOffset simply returns an integer for the note to play. Seems like MIDI uses 21 for A0, so I'll have to shift everything by 21. Velocity is how hard the note is struck at first. Delay is specified as a fraction of a second, which seems to mean how many times that line is run per second when placed in a loop. I don't fully understand how it works outside of a loop, but it seems like that value must be the same, tied to the tempo of the whole orchestra.
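The offset of 21 can be illustrated with a tiny conversion sketch. In standard MIDI numbering, note 21 is A0 (the lowest of the 88 piano keys) and note 69 is A4 at 440 Hz; the helper names and the 0-based key index are assumptions made for this example, not MIDI.js functions.

```javascript
// MIDI note number of A0, the lowest key on an 88-key piano.
const PIANO_KEY_OFFSET = 21;

// keyIndex: 0-based index into the 88 keys (0 = A0, 87 = C8).
function keyIndexToMidi(keyIndex) {
  return keyIndex + PIANO_KEY_OFFSET;
}

// Equal-temperament frequency in Hz for a MIDI note number (A4 = 69 = 440 Hz).
function midiToFrequency(midiNote) {
  return 440 * Math.pow(2, (midiNote - 69) / 12);
}
```

So the top key, index 87, becomes MIDI note 108, and the bottom key, MIDI note 21, comes out at 27.5 Hz.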

MIDI.chordOff specifies the duration to play a note. The MIDI.chord__ methods are simply convenience wrappers around MIDI.note__, to operate on multiple notes at once. MIDI.noteOff can be used to terminate individual notes of a chord played before at different times, or MIDI.chordOff can be used to terminate multiple ones at once.

Volume is set via:

MIDI.setVolume(<channel: int>, <volume: int>);

Should be straightforward to implement as a 3-layer loop -- for all instruments in the orchestra, for all notes playing on that beat on that instrument, chordOn, then for each of those notes, noteOff based on the note's value.
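Here is one way the loop could be laid out. To keep it runnable without MIDI.js, the sketch only builds a list of plain event objects that mirror the chordOn / noteOff calls above instead of calling the library; the orchestra shape (secondsPerBeat, instruments, beats, key, value) and the name scheduleBeat are all hypothetical.

```javascript
// orchestra: {
//   secondsPerBeat: number,
//   instruments: [{ channel, velocity, beats: [ [ { key, value } ] ] }]
// }
// key is a 0-based piano key index; value is the note length in beats.
function scheduleBeat(orchestra, beatIndex) {
  const events = [];
  orchestra.instruments.forEach(function (instrument) {
    const notes = instrument.beats[beatIndex] || [];
    if (notes.length === 0) return; // nothing to play for this instrument

    // One chordOn for every note starting on this beat (offset keys by 21 for MIDI).
    events.push({
      type: "chordOn",
      channel: instrument.channel,
      notes: notes.map(function (n) { return n.key + 21; }),
      velocity: instrument.velocity,
      delay: 0
    });

    // One noteOff per note, delayed by that note's own value.
    notes.forEach(function (n) {
      events.push({
        type: "noteOff",
        channel: instrument.channel,
        note: n.key + 21,
        delay: n.value * orchestra.secondsPerBeat
      });
    });
  });
  return events;
}
```

A player would then walk this list and hand each entry to the matching library call on the instrument's channel.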

Feb 11

Got the note operations (add / delete / edit) on the grid fully working, all as invocations and with logical reverses (they can be fully undone/redone using the established stacks with no limitations). I had to implement a preview layer as well, since when the user drags the notes to lengthen/shorten them or when creating a new note, I didn't want the controller to call the method to set the note length multiple times and push multiple commands onto the undo stack. That took quite a bit of time as it involves collision detection as well - two notes cannot overlap. I had to work around another one of Backbone Modelbinder's limitations - binding multiple values to style will have them overwrite each other, unlike binding to class, so for the notes, since they are all free-floating, I had to use two elements - bind the outer div to top, and the inner div to height, and set them both to display: inline-block so the outer div takes the inner one's height.

Had to fix a couple of bugs in the end, visual and otherwise, but it's done. Next stage is to get the grid ops fully working (add / delete rows), and getting the instrument models fully finished. Currently the instruments are merely skeletons of values without a sound generator unit, and I still need to give them each a color selector - right now their notes all default to fuchsia / maroon. Overall, very satisfied with today's progress. Basic playback implementation in MIDI.js should be able to be completed within the week, or the next at the very latest if I decide to prioritize other things or if some other project comes up on my radar.

Feb 10

Made some good progress on my research project. On this one, in what time I had left I searched around for good alternatives to Timbre.js (not that Timbre is bad - quite the contrary, really), but I just wanted to have another look around to see what's out there. Found MIDI.js with a damn impressive demo, and a good quality soundfont library already encoded as JSON base64 arrays that I'll try out tomorrow. There's so much potential for this tech. Still have to get that grid back up first though. Grah.

Feb 9

Got the grid display back up, but event bindings are not fully done yet, specifically those concerning playback and the note grid. First I have to get the pitch-based model right. Spent some time today tidying up the files and spiking a Java project to minify + concat, using the Closure compiler and YUI css minifier components. Looks promising enough, but I didn't proceed further as this is more important. Looks like the reconstruction's going to take at least one more day, but I have to take time out tomorrow to get some work done on my research project, so we'll see.

Feb 8

Everything's broken to bits since I'm halfway through refactoring as per yesterday's plan, but the models and views are shaping up nicely. I've modified the logo a bit as the relative positioning is preventing elements from being clicked on. Test-wise I've written unit tests for half the classes. Hopefully most of this'll be done by tomorrow or the day after. I've decided to not transpose the grid, since once I did the layout is much less intuitive - at least to me as a piano player. The main motivation for flipping it is so the user has more space to work with, but it's not worth the tradeoff as far as it stands now.

Oh yeah, almost forgot. I tried seeing if Epoxy.js is a better alternative than ModelBinder - and got mixed results. Mainly I did this because of the irksome problem in that ModelBinder requires an extra element when binding collections - you can't target whatever you insert as a template - its direct descendant is the highest level you can bind to. This means whatever I insert in the grid would have a dangling div somewhere. Hidden away, of course, but still, it's on the DOM. In a way, Epoxy's syntax is more terse binding models to views, but its main drawback is that if you want to bind to collections, it needs a one to one relationship between the collection and the view. This makes it an immediate no-go, as even just for the instruments, I need to bind it to both the grid and the instrument panel. Hence, back to ModelBinder it is.

Feb 7

Got the undo/redo stack done. There's a more pressing concern, however as the project is at a crossroads of sorts. See #Design consideration 02 - The music model

Feb 6

Had to take time out for a university research project of mine involving clustering queries in search engine logs. Anyway, regarding SynthJS, I looked at Grunt and other JS and standard build tools (Ant, Maven, Yeoman, etc.) to see if they had a one-click minify-concat etc. build option using a config file from within Eclipse, but came away disappointed that there isn't (or at least I couldn't make one work after an afternoon). At least I finally figured out how to make Eclipse outline the code correctly from a Stack Overflow post. Apparently the outermost anonymous function cannot self-execute. One must declare a {} prototype and execute the outermost function on a separate line -.-' mm.. Ok.., and apparently it doesn't work in Kepler, but I'm on Eclipse Juno.

I should probably start testing my models in QUnit before there's too many, although I have little to no idea about how to test the event bindings declared in Backbone Views. Actual work done, I whipped up a logo in CSS3 which should serve its purpose well enough for now.

Feb 5

Spent most of today implementing the planned capture layer and DOM optimizations. It was way more troublesome than I thought it would be, but the end result is more than worth it - everything is now buttery smooth. No jitters or delays whatsoever when doing most things I could think of, other than saturating the whole screen of multiple instruments with notes. I didn't know event.offsetX/Y was optional -- Firefox doesn't have it, so I had to use a fallback called event.originalEvent.layerX/Y. When I was doing all that, Opera started refusing to work for whatever reason. I spent a while trying to fix it, but seeing that Timbre.js uses Webkit/Firefox's audio API, I decided to leave it for the time being. But guess what, after everything was finished and the grid polished up, Opera decided to work with the implementation again. Oh well, guess it doesn't like being left out =) Sure, it still doesn't play anything but I like to get everything in working order in case I want to port to the HTML5 audio framework later on -- I try to maintain quite a clean line of separation between my models and the audio generation suite.
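The offset fallback mentioned above might be wrapped up like this. It is a sketch that accepts either a native event or a jQuery-style event object with an originalEvent property; pointerOffset is a hypothetical helper name.

```javascript
// Return the pointer position relative to the event target.
// Prefers offsetX/offsetY and falls back to layerX/layerY on the
// underlying native event when the offsets are missing.
function pointerOffset(event) {
  if (typeof event.offsetX === "number" && typeof event.offsetY === "number") {
    return { x: event.offsetX, y: event.offsetY };
  }
  const original = event.originalEvent || event;
  return { x: original.layerX, y: original.layerY };
}
```

Note that layerX/layerY are measured against the nearest positioned ancestor, so the fallback only matches offsetX/offsetY when the capture layer itself is positioned.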

The biggest problem was getting the listeners for each beat to rebind properly when users delete beats -- it took me several hours to figure that out but I've finally solved it. For all that work, the performance improvement is worth it.

Feb 4

Most of today is concerned with addressing performance and usability concerns. The easiest thing done today is getting Orchestra to automatically select an instrument when it has none whenever a new one is added. That took under 2 minutes. Done, boom, yay, whatever. The rest took a lot longer. Primarily this concerns the grid behavior. This is important enough to be covered at the top. See #Design consideration 01 - The grid. Also, Jenkins is feeling uncooperative and I ended up throwing away several hours because the package for Ubuntu (actually they have Debian/Redhat only) refuses to deploy. Has something to do with Winstone. Anyways I spent enough time on Google and troubleshooting already so I decided to write this up. Most of today's work is covered in the given link.

End of Jan - Feb 3

I'm writing this as a back post since I'm late like that (actually I spent most of the day trying to deploy Jenkins onto my Ubuntu instance on Amazon EC2 but the packages don't want to work, so I decided to do this instead and forgo CI / automatic deployment for the time being as I don't see a straightforward solution).

The project started before this but it was as a local hard drive copy. Ha. These few days were mostly initial project setup and the like. I looked through audio libraries to use and settled on Timbre.js, and used Music.js to generate the proper frequencies for the notes easily. Music.js is using the circle of fifths to do this - this would make sense to people familiar with music theory. Got started on the initial page layouts and used Backbone models. Main initial goal is to get the note grid functional since, you know, having it look real nice but only stare at you and not work makes one crazy. Okay, maybe just me.

The basic layout of the application is thus: There are a series of menus and elements, all labeled with element ids for fast DOM lookup, and all of them hug that grid in the middle which takes center stage. The user picks which note they want to play at what time, and clicks on the appropriate square to register it. When they hit play, the application plays it back.

Initially this grid is generated naively - one div per square, since I'm still having a refresher on Backbone.js etc. and I wasn't that concerned about performance considerations at that point although I'm aware that it wasn't performing as well as I'd like it to.

So, summary time: functionality-wise, up to Feb 3, I got the grid to actually work, along with the playback system and rudimentary add/remove beats from the grid.