Main Page

Hi, I'm Li! I graduated from University College London in August 2015 with a first-class MEng in Computer Science. Since then I've gravitated towards the research side of things, especially applied machine learning and artificial intelligence. During university, I collaborated with my advisor, Prof. Emine Yilmaz, on topics related to search and advertising, semantic computation, and NLP, working with corpora and lexical resources such as the BNC, YAGO2, WordNet, and SentiWordNet. I'm currently a registered SCPD student under Stanford University's professional education programme, while employed as a research SDE in Singapore.

Why computer science? At first, I entered the field because I love building things. Stacks. Factories. Interfaces. Semaphores. Software systems are teeming cities running like clockwork on top of layers and layers of abstraction. I thought that I wanted to be a developer for sure, but then I began to see some really interesting problems in the field, and approaches to solving them, so I focused my efforts on research too. Computer science (and AI / machine learning) sits very much in the middle of interdisciplinary research, and I think this is where the most exciting things are happening. Before this, I was a medical student at Imperial College London - I left after two years - but that's a story for another time.

Projects

Bounding Out-of-Sample Objects (2017)

This was my project submission for Stanford University's CS231n: Convolutional Neural Networks for Visual Recognition.

Abstract

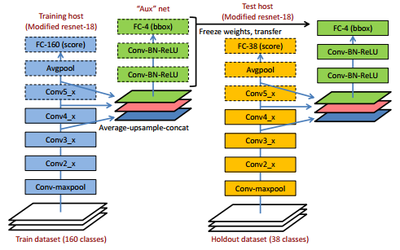

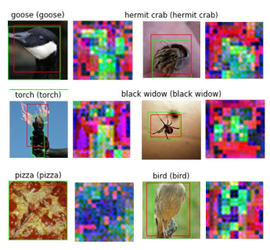

ConvNets have spatial awareness built into them by design, specifically in their feature maps or activation volumes. With respect to any task such as image classification, they are at the same time implicitly performing some form of localization of salient features. We want to understand how good this localization information is at annotating bounding boxes compared to ground-truth, especially around out-of-sample objects which the convnet has never been trained on. This requires a model which is indifferent to the class label, and thus it must operate on spatial information that is as general as possible, across as many image classes as possible. Additionally, we want to investigate whether this method could be adapted to different image classification architectures.

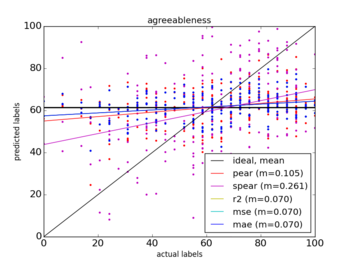

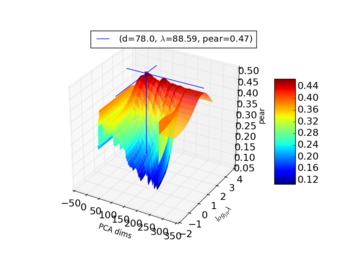

Predicting Personality from Twitter (2015)

- Final year MEng project: [ PDF ]

Abstract

We present a 6-month-long multi-objective two-part study on the prediction of Big-Five personality scores from Twitter data, using lexicon-based machine learning methods. We investigate the usefulness of models trained with TweetNLP features, which have not been used in this domain before.

In the first part of the study, we cast personality prediction as a classification problem, and we investigate how prediction performance is affected by different methods of data normalization, such as whether we divide each feature by word count. In the second, main part of our study, we cast it as a regression problem, and we investigate the differences in performance when we use ranks of scores rather than actual scores, and how filtering only for users with over a certain tweet count affects prediction performance.

We report on the different methods used in existing literature, explain background information about the tools we used, and look at the common evaluation metrics used in classification and regression problems and address potential pitfalls when calculating or comparing them. We also suggest a solution on how to reconcile learning parameters for different models optimizing different metrics. Finally, we compare our best results with those in recent publications.

Our main findings are that term frequency-normalized features perform most consistently, that filtering for users with more than 200 tweets improves prediction performance significantly in the regression problem, and that prediction performance using ranked data is comparable to using actual values. We found that models trained with TweetNLP features have comparable or superior performance to those trained with the LIWC and MRC features commonly used in the literature. Models trained with both have superior performance. Compared against 15 recent models (3 papers, 5 personality scores), our best models are better at prediction than 11 of them.

IRDM project poster presentation (2015)

- Poster: [ PDF ]

This was a 4th year Information Retrieval and Data Mining course project about automatic document-tagging methods. The idea is extremely simple - we use named entity tags (e.g. Barack Obama, United States), RAKE algorithm-extracted keywords (e.g. candidate, poll, election), and WordNet domains information (e.g. political), to give readers a precise idea of an article's topic(s). From the given example tags alone, we can already be pretty confident that the target article is discussing the last one or two US presidential elections. The intuition is that the entity tags (proper nouns) give us specificity, the RAKE keywords (general, frequent terms) give us coverage, and WordNet domains information gives us a hierarchy to cluster similar articles together by topic.

We were awarded second-best for poster presentation.

SmartFence (2014)

- Please see the Microsoft Research Cambridge section under Internships.

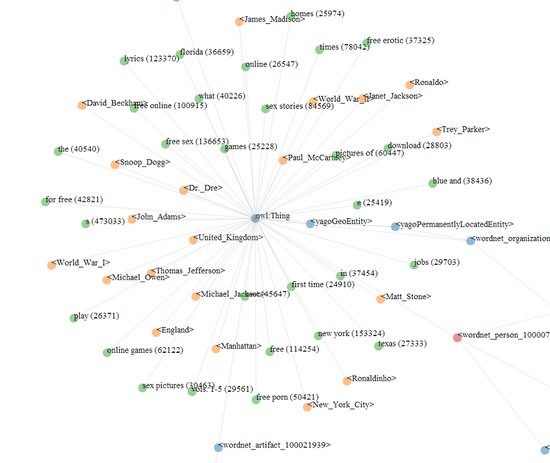

Task Identification using Search Engine Query Logs (2014)

This was my undergraduate university-based research project. The goal is to find out what users are most interested in when they search for a certain class of things. For example, using Google's related searches, if you search for Hawaii, it gives you results along the lines of "Hotels in Hawaii", or "Flights to Hawaii". Based on our understanding of how Google's system works, these are the most common strings appearing with Hawaii in searches.

We wanted to go one step further. By using a knowledge base like YAGO and the set of AOL search logs leaked in 2006, we do the same, but with semantics. The idea is to lump searches like the ones above together with other searches of the same class, for example "Colleges in Hong Kong" or "Banks in London". These searches are similar because they are all queries about a place, so by aggregating them we can find out what users most want to know when they query for places. First, we build up a class tree using the YAGO ontology. An example of a branch would be: Root --> Person --> Artist --> Musician --> Singer. Then, we add entities to each of these classes based on the RDF triples that express them, like ElvisPresley hasClass Singer. So, when we find a search string referencing the entity ElvisPresley, we remove the entity from the string and map the remainder to the class the entity belongs to, as well as all the ancestors of that class. For example, the search string Biography of Elvis Presley would map the string Biography of to the classes Root, Person, Artist, Musician, and Singer. Hence, by querying nodes of this tree, we can find out the most popular tasks users want to do when searching for any class of things. I thought this was an extremely interesting problem to tackle.
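To make the mapping concrete, here is a minimal sketch of the idea in Python. It is not the project's actual code: the `PARENT` and `ENTITY_CLASS` tables are tiny hand-written stand-ins for the YAGO ontology, and the function names are made up for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical toy ontology: child class -> parent class.
PARENT = {
    "Singer": "Musician",
    "Musician": "Artist",
    "Artist": "Person",
    "Person": "Root",
}

# Hypothetical entity facts (lowercased surface form -> class),
# standing in for triples like "ElvisPresley hasClass Singer".
ENTITY_CLASS = {"elvis presley": "Singer"}


def ancestors(cls):
    """Return cls followed by all of its ancestors up to the root."""
    chain = [cls]
    while chain[-1] in PARENT:
        chain.append(PARENT[chain[-1]])
    return chain


def add_query(tree, query):
    """Strip a known entity from the query and credit the remainder
    to the entity's class and every ancestor of that class."""
    text = query.lower()
    for entity, cls in ENTITY_CLASS.items():
        if entity in text:
            remainder = " ".join(text.replace(entity, " ").split())
            for c in ancestors(cls):
                tree[c][remainder] += 1
            return remainder
    return None  # no known entity in this query


tree = defaultdict(Counter)
add_query(tree, "Biography of Elvis Presley")
add_query(tree, "Elvis Presley songs")

# Most popular "tasks" recorded at the Artist node.
print(tree["Artist"].most_common())
```

Querying a node higher up the tree (for example `tree["Person"]`) aggregates the remainders of every entity below it, which is what lets us ask about a whole class of things at once.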

AOL logs come from real data, and real data is messy. They were full of typographical errors, machine-issued queries, foreign-language queries, keywords unsafe for minors, etc. To give an idea of what we did to get useful data out of them: first, we ran all the searches through a simple regex sieve to filter out nonsensical queries (only symbols, URLs) and machine-issued queries, which tend to be very long. Then we grouped related searches together - this is called search session segmentation (we used Levenshtein distance, tri-grams, and a cutoff of T=26 minutes). Then we ran the Porter stemmer, which reduces English words to their stems, so that we could match them against the entities in the YAGO knowledge base. We also had to think about greedy matching. Consider the string New York Times. We want to match the entire string as one entity rather than matching New York and Times separately.
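The greedy matching step can be sketched roughly as follows. This is an illustration under my own assumptions rather than the original implementation: the entity set is a toy example, and `max_len` (the longest entity name, in tokens, that we try) is a made-up parameter.

```python
def greedy_match(tokens, entities, max_len=4):
    """Scan left to right, always taking the longest entity name
    that starts at the current position."""
    matches = []
    i = 0
    while i < len(tokens):
        # Try the longest candidate span first, then shrink it.
        for n in range(min(max_len, len(tokens) - i), 0, -1):
            candidate = " ".join(tokens[i:i + n])
            if candidate in entities:
                matches.append(candidate)
                i += n  # skip past the matched entity
                break
        else:
            i += 1  # no entity starts here; move on one token
    return matches


# Hypothetical entity names; a real run would use the knowledge base.
ENTITIES = {"new york", "new york times", "times"}

print(greedy_match("new york times subscription".split(), ENTITIES))
```

With these toy entities, the query is matched as the single entity `new york times`, not as `new york` followed by `times`.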

We also ran into problems of ambiguity (resolving this is called word-sense disambiguation in NLP) - for example, Java may mean the programming language, or the island in Indonesia, or a dozen other things. We disambiguate by comparing the number of classes that the terms in a session have in common. For example, if a search session contains the terms Scala and Java, we can be fairly sure that Java means the programming language. The problem is that if only one entity is matched in a session, we cannot disambiguate because there is no other entity to compare it against. We ended up discarding many such sessions, and the remaining data only managed to populate the tree up to around the third layer. That was as far as we got in three months, but after I delivered the presentation, the project was awarded best undergraduate research group project of the year.
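A rough sketch of the class-overlap idea, again with invented data: the `SENSES` table, the sense identifiers, and the class names are all hypothetical, and the real system compared classes drawn from YAGO.

```python
# Hypothetical candidate senses: surface term -> {sense id: set of classes}.
SENSES = {
    "java": {
        "Java_(programming_language)": {"ProgrammingLanguage", "Software"},
        "Java_(island)": {"Island", "Place"},
    },
    "scala": {
        "Scala_(programming_language)": {"ProgrammingLanguage", "Software"},
    },
}


def disambiguate(term, session_terms):
    """Pick the sense of `term` that shares the most classes with the
    other terms in the session. Returns None when there is no evidence."""
    # Collect every class any other session term could belong to.
    context = set()
    for other in session_terms:
        if other != term:
            for classes in SENSES.get(other, {}).values():
                context |= classes

    best, best_overlap = None, 0
    for sense, classes in SENSES.get(term, {}).items():
        overlap = len(classes & context)
        if overlap > best_overlap:
            best, best_overlap = sense, overlap
    return best


print(disambiguate("java", ["scala", "java"]))  # a session with context
print(disambiguate("java", ["java"]))           # a single-entity session
```

The second call returns `None`, which mirrors the single-entity sessions we had to discard.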

Full-time positions

Research Software Engineer at Ocean-5 Technologies

Ocean-5 is an engineering firm specializing in underwater submersibles for use in marine research and in the oil and gas industry. I joined the company just as they were about to diversify into designing agricultural machinery. My team is currently designing and building a vehicle prototype and an associated plough assembly for use on special terrain.

I work closely with electrical and mechanical engineers, and my responsibilities span everywhere from the remote web interface for the vehicle fleet, to the Python server backend, GPS integration, and all the way down to programming the microcontrollers (in embedded C) sitting on the printed circuit boards interfacing with the vehicle hardware itself.

As this is an actual vehicle, the work has had to conform to many more specifications than I'm used to, and, because the software is actually controlling heavy hardware at the lowest level, end-to-end testing can get quite tricky. Even concurrency becomes an altogether different ballgame, because there are no threads to speak of, as there is no operating system. All we have is a piezoelectric crystal on the circuit board that acts as a system clock. So far the going has been pretty interesting, as I get to work from the highest level of abstraction to the lowest and everything in between, and I'm also picking up skills from other engineering disciplines.

R&D Scientist at Digital:MR

Digital:MR is a market research company, and I joined them for a short-term research project, which was funded by InnovateUK, the UK's technology strategy board. Chris, who I collaborated with on my final year project, introduced me to the company and the project, and he had already been with the company for several years.

The project was a feasibility study on identifying (context-free) sentiment from images. While the project proposal, timeline, and grants were handled by a previous employee (who left the company), I took over the rest of the project, which was about 3 months long, including the R&D, documentation, and writing the monthly and final progress reports to the project supervisor from the government. While I had limited discussions with other employees involved in R&D, because Digital:MR is a small company (<10 people), it was made clear from the outset that this was mostly going to be a solo research project where I was responsible for the methodology, implementation, and results.

While a non-disclosure agreement prevents me from talking in-depth about the results and methodology, I used the Yahoo! Flickr Creative Commons 100 Million Images (YFCC100M) dataset and its pre-extracted feature descriptors from YLI, and essentially applied the most straightforward methods known to me, as the project needed to finish on time.

What I found most interesting about the project was that the difficulties lay in unexpected areas. For example, because the field is new, there are inconsistencies in how to interpret the problem. Without going into detail, based on how images are tagged on Flickr, what we have is a multi-label classification problem. Some researchers (including authors of a AAAI 2015 paper) had simplified the problem to single-label classification when using Flickr's search API to compile their dataset, which I found unjustifiable based on the label distributions in YFCC100M. During the process, I also found and documented a behavioural anomaly when the widely-used Natural Language Toolkit (NLTK) interacts with the WordNet 3.0 and SentiWordNet corpora. On top of that, I had to tailor some high-level decisions on-the-fly to address various potential applications in the context of the company's clients, which were not objectives in the original research proposal. Along with reading the literature on image representation and machine learning on images, which I was relatively unfamiliar with in general, it all added up to a very interesting learning experience indeed.

After the project ended, while my agreement with the company was full-time and I was welcome to take over one of several research projects, including drafting a new grant proposal based on results of my research, I had decided to pursue my own interests by then, and we parted ways amicably.

Internships

Microsoft Research Cambridge

I joined Microsoft for 8 weeks as a Research Intern through the Bright Minds Internship Competition programme for undergraduates. I was supervised by Pushmeet Kohli and Yoram Bachrach, with limited help from Ulrich Paquet and Filip Radlinski.

Since the allocated time was short, I was told about the problem and given a direction to start off on - it was about parental / access control of the internet. There were several problems in this area which we hoped to address:

- Traditional access control relies on blacklists and whitelists of URLs. For instance, OpenDNS offers a service where they simply refuse to resolve blacklisted domains. However, domain ownership changes constantly and the lists have to be updated to reflect this.

- A site is either blocked or not, based on a fixed criterion, and there is no customization of the partitioning. For example, if a company wants to block Facebook at work, it can't use a service which has Facebook in its whitelist. You could customize the list, but if one generalizes the requirement to "I want to block all social media sites", that's not possible if there is no such list.

- Users can only recognize a tiny fraction of sites in either list.

We wanted to try a different method. The project was called SmartFence - users block or allow the sites they know about to train the system, and the system determines the suitability of the rest of the sites automatically. First, we make the assumption that users tend to visit websites which serve the same information need in a session. Hence, websites appearing within the same search session in Bing are said to be correlated, and we transform this correlation data into 30-dimensional feature vectors. We then construct a giant similarity matrix of websites based on these vectors. For our investigation, we constructed it in-memory for the top ~10k sites (by frequency of visitation) rather than for everything. When users block or allow the sites which they recognize, we label these -1 and 1 respectively (this becomes our training set). For any site which a user does not recognize, its score is calculated based on its similarity to all the labelled sites (this is our hypothesis' output). We used a matrix primarily because we need to be able to recompute the partitioning quickly when the user changes the labelled set of websites, the weighting decay function, or the cutoff.

We initially performed k-means clustering to investigate what the clusters would look like with different values of k. We wanted to see if these partitions made sense. Then we had a brief foray into reducing the vectors to two-dimensional space (bearing in mind information loss) so the user can draw out areas on the screen which they want to block or allow. I also briefly considered partitioning by Voronoi cells while working in 2D (because computing convex hulls rapidly becomes infeasible in higher dimensions). We finally abandoned hard cluster boundaries altogether, and settled on a kernel method. This meant that initially, every site is related to every other (a fully connected graph); we cull the arcs by setting a minimum similarity threshold, and we control the partitioning by setting the score threshold at which a site is blocked.
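To give a flavour of the final approach, here is a toy sketch using NumPy. It is only an illustration: the RBF kernel, the `gamma`, `min_similarity`, and `block_cutoff` parameters, and the 2-dimensional example vectors are all my assumptions here, not the kernel or settings used in SmartFence, and the dense pairwise computation is only suitable for small examples.

```python
import numpy as np


def score_sites(features, labels, min_similarity=0.5, gamma=1.0):
    """Score every site by a similarity-weighted vote of labelled sites.

    features: (n, d) array of site vectors.
    labels:   length-n array with +1 (allowed), -1 (blocked), 0 (unlabelled).
    """
    diffs = features[:, None, :] - features[None, :, :]
    sq_dist = np.sum(diffs ** 2, axis=-1)
    sim = np.exp(-gamma * sq_dist)      # assumed kernel: RBF
    sim[sim < min_similarity] = 0.0     # cull weak arcs
    np.fill_diagonal(sim, 0.0)          # a site does not vote for itself
    return sim @ labels                 # unlabelled sites contribute 0


def blocked(scores, labels, block_cutoff=0.0):
    """Labelled sites keep the user's decision; unlabelled sites are
    blocked when their score falls below the cutoff."""
    return np.where(labels != 0, labels < 0, scores < block_cutoff)


# Two tight groups of sites; one site in each group is labelled.
features = np.array([[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.1, 5.0]])
labels = np.array([-1, 0, 1, 0])

scores = score_sites(features, labels)
print(blocked(scores, labels))
```

Because the similarity matrix is computed once, changing the labels or the cutoff only requires redoing the cheap matrix-vector product and comparison, which is the property that made a matrix attractive for an interactive interface.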

I delivered a working prototype in 4 weeks for the internal company hackathon, and a refined version with a web front-end (knockout + D3) at the end of the internship. I didn't have any exposure to machine learning before the internship, but thankfully, I managed to pick up the basics on-the-fly. The period before the hackathon was particularly intense, because we had to get a basic algorithm and interface going in just four weeks. My previous experience in web development meant that I could rapidly iterate through multiple versions of the GUI to arrive at something that intuitively conveyed what our algorithm was trying to do. Dr. Radlinski took particular interest in the GUI implementation - he said it was more impressive than what one would usually see at a conference.

This was as good an internship as I could have ever hoped for. I got to see what it is like to work in industrial research, I began to understand how powerful machine learning is and what inventive things other researchers are doing with it, and most of all, I got the chance to work with some really fantastic people. I was trying very hard not to be the stupidest guy in the room - I was an undergraduate amongst PhDs and postdocs and people with decades of industry experience, but the guidance and support I received was second to none. It was awesome!

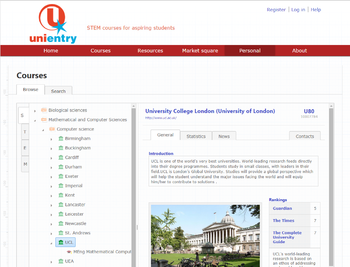

UniEntry

UniEntry is a startup company which I worked for in summer 2013. The problem it tries to solve is that in the UK, it is very difficult to make informed decisions when selecting universities. Rankings are not the only thing that matters - there is also the atmosphere of the institution, the faculty, the focus and quality of research of the individual institutions, etc., and much of this information is not available in the prospectuses. I remember the experience of selecting a UK university as an international student while I was still doing my A levels - it felt like a shot in the dark. I was fortunate enough to be attending a college with excellent admissions tutors, so I had the benefit of counselling and advice on relatively obscure facts (for example, that Gonville and Caius College at Cambridge University was thought to be the best one for medical studies; at Cambridge, students belong to individual colleges, and while they all attend common lectures, supervisions are organized on a college-by-college basis). Not every student is fortunate enough to have access to this kind of coaching, and even though I did, I felt like the choice was still not as informed as I would have liked it to be.

Enter UniEntry. It was founded as a part-time venture by two individuals, who hired me and another developer to develop a pilot site over the summer. UniEntry pulls information from the Higher Education Statistics Agency to bypass potential bias in the university prospectuses, to make the application process as transparent as possible.

While guest users can browse the courses, much of the platform is actually inaccessible to them. Registered teachers can monitor their students' applications and university choices, students of participating schools can register their grades and the platform will inform them about whether their choices are a good fit for their capabilities, and university undergraduates can register as coaches to guide and inform students and teachers.

The pilot site is supposed to be used as a proof of concept to get schools involved and to look for funding. We used the agile development model, so we had daily stand-ups, sprint planning, progress burndown charting, the works. The other developer (Wayne) and I had just gone through the application process ourselves, so the two of us had great influence on what features a site like this should have to be useful. The site was developed in ASP.NET, and I was the primary front-end developer and designer. This was my first time working in a small start-up company - each one of us had multiple roles - planning, front-end, back-end, database, office boy, manual work etc. We had lunch together to discuss concepts or the project, and we had to help each other out on a regular basis. We did pair programming, and we audited each other's code. It was a really close-knit team. Wayne initially came from an electrical engineering background, so I brought him up to speed on C#, polymorphism, generics, common design patterns and MVC in general. I love teaching and explaining things, so I enjoyed it and learned from it as much as he did.

After the initial three months, I maintained contact with the company and submitted bugfixes when requested. Right now there's not much progress being made because the CEO (Will) is busy with his own research projects. However, I think it is a project with a worthy cause - even in its current state as a pilot site, it's already useful even to guest users. I think I would have benefited from it when I was applying to universities myself, so I certainly hope it gets the funding it needs to be completed at some point.

P.S. At the end of the internship, Will took us all on a helicopter ride around London as a surprise treat for a job well done!

Other

My other experiences are related to my brief stint in medical school rather than computer science, but I consider them to be valuable and one-of-a-kind.

- Work shadowing at the Van Andel Institute, Michigan, USA. I generally observed the environment and working atmosphere within a biomedical research lab. I learned about what the researchers do on a regular basis, things like automated sequencing, running DNA microarrays etc. I learned how they made knockout mice (mice with certain genes deactivated) to study the effects of those genes, and how they highlight sections of DNA by binding highly specific fluorescent molecules to them, using techniques (with really fancy names) like spectral karyotyping and fluorescence in situ hybridization. Looking back at my experiences in the lab always reminds me of how different the nature of the work in the various fields of science can be.

- In Malaysia, I had a work placement in a hospital's critical care unit and department of anaesthesia. I remember how meticulous everything was - the cleanliness precautions we had to take, the nurses charting the patient's statistics every few hours, etc. Later on, I was attached to the Department of Public Health of Penang, and we went off checking the safety of water supplies and fogging areas with reported cases of dengue fever.

Personal interests

I've been studying Japanese on my own in my free time for a while now. Initially I was simply looking for a target language to work with machine translation, because the grammar is unusual compared to the other languages I knew. I went off to language school in Hokkaido for a month back in September 2014, as the best place to learn a language is in its country of origin. The trip was absolutely eye-opening.

I can speak, listen, read and write in four languages:

- English

- Mandarin (and certain dialects)

- Japanese (N1)

- Malay

On the music side of things, I hold ABRSM grade 8 certification for piano and theory of music, and I play the piano, the erhu and the gaohu (Chinese viola and violin). I used to be student instructor and lead player for the erhu in our Chinese Orchestra society back in high school.

My favorite book is Antoine de Saint-Exupéry's Wind, Sand and Stars (memoir).

To contact me, please use the information in my resume.

~ Thanks for visiting! ~